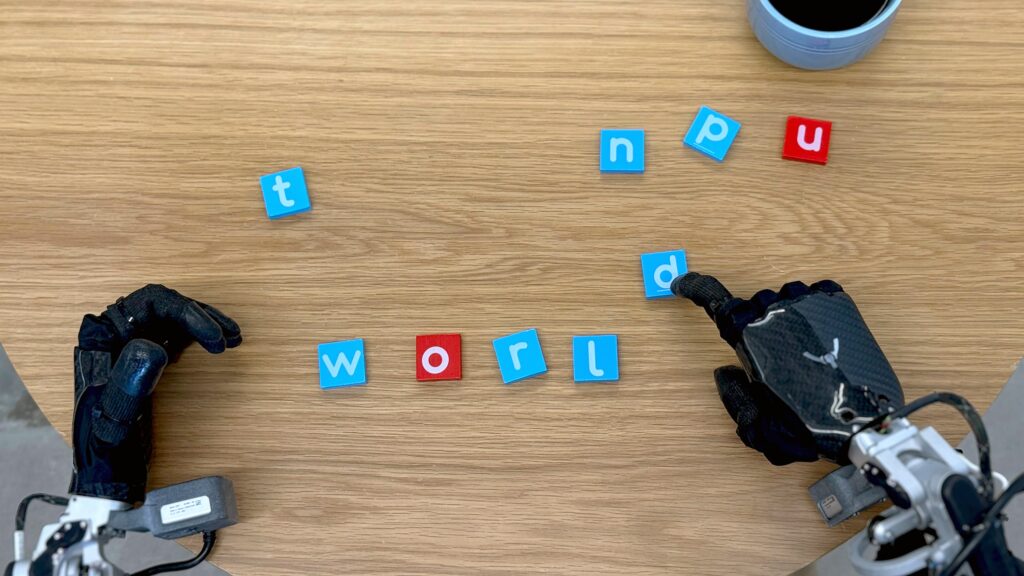

Google DeepMind has taken a significant step forward in AI-driven robotics with two new models built on Gemini 2.0: Gemini Robotics and Gemini Robotics-ER. The models are designed to improve how robots perceive, reason about, and interact with the physical world, making them more adaptable and efficient in real-time settings.

Gemini Robotics: Vision-Language-Action Integration

The Gemini Robotics model integrates vision, language, and action. This lets robots interpret complex visual scenes and instructions and translate them into physical actions with greater precision. Traditional robotic systems rely on fixed, pre-programmed behaviors. Gemini Robotics, by contrast, continuously monitors its surroundings, interprets what it observes, and adjusts its behavior accordingly.

Key advancements include:

- Enhanced Generalization: According to DeepMind, Gemini Robotics more than doubles performance on a comprehensive generalization benchmark compared with other state-of-the-art vision-language-action models.

- Real-Time Adaptation: The model detects changes in its environment or instructions and adjusts its actions on the fly. This improves responsiveness and reduces execution errors.

- Intuitive Task Management: Through its vision-language-action framework, Gemini Robotics can understand and carry out instructions given in everyday, conversational language.

Gemini Robotics-ER: Mastering Spatial Reasoning

Gemini Robotics focuses on turning multimodal understanding into action. Gemini Robotics-ER ("ER" stands for embodied reasoning) instead focuses on spatial reasoning, a critical component of robotic dexterity and autonomous navigation. The model is designed to improve robots’ ability to grasp objects, plan their movements, and optimize their physical interactions with the environment.

Notable capabilities of Gemini Robotics-ER include:

- Autonomous Grip and Motion Planning: The model works out suitable ways to grip objects and plans the trajectories for maneuvering them on its own.

- Superior Control Efficiency: In end-to-end robot control tasks, it achieves a 2x-3x higher success rate than Gemini 2.0.

- Advanced Learning Mechanisms: By processing vast amounts of spatial data, the model refines its understanding of object placement, orientation, and interaction.

ASIMOV Dataset: Setting a New Standard for Robotic Safety

Alongside the Gemini Robotics models, DeepMind is also releasing ASIMOV, a new dataset for evaluating and improving semantic safety in robotics. The dataset is intended to help researchers train and evaluate AI systems so that they can operate safely in dynamic, unpredictable environments. This should reduce the hazards associated with autonomous robots.

Implications for the Future of Robotics

The introduction of Gemini Robotics and Gemini Robotics-ER marks a major step forward in AI-powered robotics. These models pave the way for more intuitive, adaptable, and intelligent robotic systems that could revolutionize industries such as manufacturing, healthcare, logistics, and personal assistance.

With the combination of enhanced real-time processing, superior spatial reasoning, and improved safety assessments, Google DeepMind is shaping the future of AI-driven automation. The coming years will likely see these technologies integrated into a broad range of applications, making robots more efficient partners in both industrial and everyday settings.

As these advancements continue to evolve, Google’s latest work looks set to push the boundaries of what robots can achieve in the real world.